-

2023/11/29/用户新增预测挑战赛-模型交叉验证/

任务2.2 模型交叉验证

# 导入库

import pandas as pd

import numpy as np

import matplotlib as plt

import seaborn as sns# 读取训练集和测试集文件

train_data = pd.read_csv('用户新增预测挑战赛公开数据/train.csv')

test_data = pd.read_csv('用户新增预测挑战赛公开数据/test.csv')

# 提取udmap特征,人工进行onehot

def udmap_onethot(d):

v = np.zeros(9)

if d == 'unknown':

return v

d = eval(d)

for i in range(1, 10):

if 'key' + str(i) in d:

v[i-1] = d['key' + str(i)]

return v

train_udmap_df = pd.DataFrame(np.vstack(train_data['udmap'].apply(udmap_onethot)))

test_udmap_df = pd.DataFrame(np.vstack(test_data['udmap'].apply(udmap_onethot)))

train_udmap_df.columns = ['key' + str(i) for i in range(1, 10)]

test_udmap_df.columns = ['key' + str(i) for i in range(1, 10)]

# 编码udmap是否为空

train_data['udmap_isunknown'] = (train_data['udmap'] == 'unknown').astype(int)

test_data['udmap_isunknown'] = (test_data['udmap'] == 'unknown').astype(int)

# udmap特征和原始数据拼接

train_data = pd.concat([train_data, train_udmap_df], axis=1)

test_data = pd.concat([test_data, test_udmap_df], axis=1)

# 提取eid的频次特征

train_data['eid_freq'] = train_data['eid'].map(train_data['eid'].value_counts())

test_data['eid_freq'] = test_data['eid'].map(train_data['eid'].value_counts())

# 提取eid的标签特征

train_data['eid_mean'] = train_data['eid'].map(train_data.groupby('eid')['target'].mean())

test_data['eid_mean'] = test_data['eid'].map(train_data.groupby('eid')['target'].mean())

# 提取时间戳

train_data['common_ts'] = pd.to_datetime(train_data['common_ts'], unit='ms')

test_data['common_ts'] = pd.to_datetime(test_data['common_ts'], unit='ms')

train_data['common_ts_hour'] = train_data['common_ts'].dt.hour

test_data['common_ts_hour'] = test_data['common_ts'].dt.hour# 导入模型

from sklearn.linear_model import SGDClassifier

from sklearn.tree import DecisionTreeClassifier

from sklearn.naive_bayes import MultinomialNB

from sklearn.ensemble import RandomForestClassifier

# 导入交叉验证和评价指标

from sklearn.model_selection import cross_val_predict

from sklearn.metrics import classification_report

# 训练并验证SGDClassifier

pred = cross_val_predict(

SGDClassifier(max_iter=1000, early_stopping=True),

train_data.drop(['udmap', 'common_ts', 'uuid', 'target'], axis=1),

train_data['target']

)

print(classification_report(train_data['target'], pred, digits=3))

# 训练并验证DecisionTreeClassifier

pred = cross_val_predict(

DecisionTreeClassifier(),

train_data.drop(['udmap', 'common_ts', 'uuid', 'target'], axis=1),

train_data['target']

)

print(classification_report(train_data['target'], pred, digits=3))

# 训练并验证MultinomialNB

pred = cross_val_predict(

MultinomialNB(),

train_data.drop(['udmap', 'common_ts', 'uuid', 'target'], axis=1),

train_data['target']

)

print(classification_report(train_data['target'], pred, digits=3))

# 训练并验证RandomForestClassifier

pred = cross_val_predict(

RandomForestClassifier(n_estimators=5),

train_data.drop(['udmap', 'common_ts', 'uuid', 'target'], axis=1),

train_data['target']

)

print(classification_report(train_data['target'], pred, digits=3)) precision recall f1-score support

0 0.862 0.781 0.820 533155

1 0.151 0.238 0.185 87201

accuracy 0.704 620356

macro avg 0.507 0.510 0.502 620356

weighted avg 0.762 0.704 0.730 620356

precision recall f1-score support

0 0.934 0.940 0.937 533155

1 0.618 0.593 0.605 87201

accuracy 0.891 620356

macro avg 0.776 0.766 0.771 620356

weighted avg 0.889 0.891 0.890 620356

precision recall f1-score support

0 0.893 0.736 0.807 533155

1 0.221 0.458 0.298 87201

accuracy 0.697 620356

macro avg 0.557 0.597 0.552 620356

weighted avg 0.798 0.697 0.735 620356

precision recall f1-score support

0 0.921 0.955 0.937 533155

1 0.642 0.498 0.561 87201

accuracy 0.890 620356

macro avg 0.782 0.726 0.749 620356

weighted avg 0.882 0.890 0.885 620356

- 在上面模型中哪一个模型的macro F1效果最好,为什么这个模型效果最好?

树类模型效果都很好,决策树模型的效果最好。这是因为数据集非线性、较复杂、特征之间相关度不高,决策树更适合捕获复杂的决策边界。

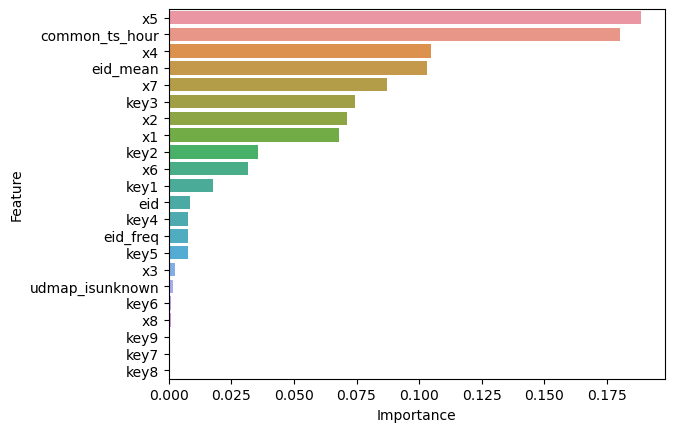

- 使用树模型训练,然后对特征重要性进行可视化;

# 准备特征矩阵 X 和目标向量 y

X = train_data.drop(['udmap', 'common_ts', 'uuid', 'target'], axis=1)

y = train_data['target']# 训练决策树模型

dt_model = DecisionTreeClassifier()

dt_model.fit(X, y)

# 获取特征重要性

feature_importance = dt_model.feature_importances_

# 创建一个包含特征名称和其重要性值的DataFrame

importance_df = pd.DataFrame({'Feature': X.columns, 'Importance': feature_importance})

# 按照重要性值降序排序

importance_df = importance_df.sort_values(by='Importance', ascending=False)

# 可视化特征重要性

sns.barplot(x='Importance', y='Feature', data=importance_df)

可以看出,对决策树模型来说,key6,key7,key8,x8均为重要性较低的特征。

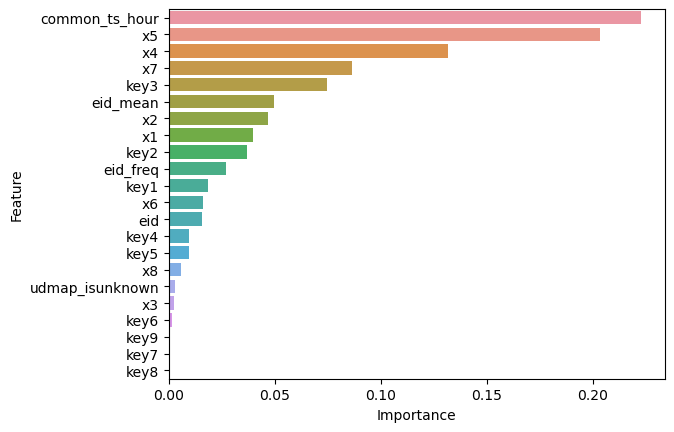

# 训练随机森林模型

rf_model = RandomForestClassifier(n_estimators=100, random_state=42)

rf_model.fit(X, y)

# 获取特征重要性

feature_importance = rf_model.feature_importances_

# 创建一个包含特征名称和其重要性值的DataFrame

importance_df = pd.DataFrame({'Feature': X.columns, 'Importance': feature_importance})

# 按照重要性值降序排序

importance_df = importance_df.sort_values(by='Importance', ascending=False)

# 可视化特征重要性

sns.barplot(x='Importance', y='Feature', data=importance_df)

可以看出,对于随机森林模型来说,key7,key8和key9重要性最低。

-再加入3个模型训练,对比模型精度

可以随手一试。

Author: Siyuan

URL: https://siyuan-zou.github.io/2023/11/29/%E7%94%A8%E6%88%B7%E6%96%B0%E5%A2%9E%E9%A2%84%E6%B5%8B%E6%8C%91%E6%88%98%E8%B5%9B-%E6%A8%A1%E5%9E%8B%E4%BA%A4%E5%8F%89%E9%AA%8C%E8%AF%81/

This work is licensed under a CC BY-SA 4.0 .